Problem

I wanted an easy way to keep track of recipes I’ve cooked before and liked, so I can quickly find something to cook next.

Solution

So, I built a Vue/Express app that lets me manage these recipes. The recipes can come from many different sites, be written in different languages, use different measurement units for ingredients, and follow different presentation formats, but I wanted a uniform way to record and keep track of them. I decided AI was the right tool for this conversion and chose OpenAI as the provider.
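To make the "uniform way" more concrete, here is a minimal sketch of a target shape a converted recipe could take, plus a guard that checks JSON returned by the model before it is stored. The field names (title, sourceUrl, ingredients, steps) and the parseRecipe helper are hypothetical; the post does not show the app's actual schema.

```typescript
// Hypothetical normalized recipe shape; field names are illustrative only.
interface Ingredient {
  name: string;
  quantity: number; // amount expressed in `unit`
  unit: string; // e.g. "g", "ml", "piece"
}

interface Recipe {
  title: string;
  sourceUrl: string;
  ingredients: Ingredient[];
  steps: string[];
}

// Narrows an unknown value (e.g. parsed model output) to a Recipe.
// Returns null instead of throwing so the caller decides how to recover.
function parseRecipe(value: unknown): Recipe | null {
  if (typeof value !== "object" || value === null) return null;
  const r = value as Record<string, unknown>;
  if (typeof r.title !== "string" || typeof r.sourceUrl !== "string") return null;
  if (!Array.isArray(r.steps) || !r.steps.every((s) => typeof s === "string")) return null;
  if (!Array.isArray(r.ingredients)) return null;
  const ingredientsOk = r.ingredients.every((i: unknown) => {
    if (typeof i !== "object" || i === null) return false;
    const ing = i as Record<string, unknown>;
    return (
      typeof ing.name === "string" &&
      typeof ing.quantity === "number" &&
      typeof ing.unit === "string"
    );
  });
  return ingredientsOk ? (value as Recipe) : null;
}
```

Returning null rather than throwing keeps a bad model response from crashing a request handler; the caller can retry the conversion or report the error.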

To handle such conversion, I built a Converter class in my TypeScript/Express API, which receives an openAI parameter in its constructor.

That openAI parameter is an instance of the OpenAI client from the openai Node package.

It is marked with the @inject(...) decorator because I’m using TSyringe for dependency injection in the API project, but an OpenAI instance could just as well be created inline if I weren’t using any inversion-of-control patterns.
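A minimal sketch of such a Converter, under stated assumptions rather than the app's actual code. It uses a hypothetical ChatClient interface that mirrors the single call needed here (chat.completions.create from the openai package), so the example runs without openai or tsyringe installed; in the real project the constructor parameter would be the OpenAI instance carrying @inject(...). The prompt wording, the buildMessages/convert method names, and the default model value are illustrative.

```typescript
// Hypothetical structural type covering the one call this sketch makes.
// It is meant to mirror the shape of `chat.completions.create` in the
// `openai` package, so an OpenAI instance could fill this role.
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface ChatClient {
  chat: {
    completions: {
      create(params: {
        model: string;
        messages: ChatMessage[];
      }): Promise<{ choices: { message: { content: string | null } }[] }>;
    };
  };
}

class Converter {
  // In the original project this parameter carries TSyringe's @inject(...)
  // decorator; here it is a plain constructor parameter.
  constructor(
    private readonly openAI: ChatClient,
    private readonly model: string = "gpt-4.0", // model name the post says the app requests
  ) {}

  // Builds the prompt separately so it can be inspected without a network call.
  buildMessages(rawRecipe: string): ChatMessage[] {
    return [
      {
        role: "system",
        content:
          "Convert the recipe to English JSON with title, ingredients (metric units) and steps.",
      },
      { role: "user", content: rawRecipe },
    ];
  }

  // Sends the recipe text and returns the parsed JSON as `unknown`,
  // leaving validation to the caller.
  async convert(rawRecipe: string): Promise<unknown> {
    const completion = await this.openAI.chat.completions.create({
      model: this.model,
      messages: this.buildMessages(rawRecipe),
    });
    const content = completion.choices[0]?.message.content;
    if (!content) throw new Error("Model returned no content");
    return JSON.parse(content);
  }
}
```

Passing a client straight to the constructor, as a unit test with a fake client would, is also what the container-free, inline alternative looks like.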

To set up the OpenAI client, I use a DiStartup class.

Configuring Arbio rules

I only pass in the API key to initialize the OpenAI instance, but the client accepts more options than that.

One such option is baseURL, which can point the OpenAI library to any OpenAI-compatible URL.
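To show what changing baseURL actually changes, here is a simplified sketch of how an OpenAI-style client derives its chat-completions request target. It roughly mirrors what the openai package does (a POST to <baseURL>/chat/completions with the key sent as a Bearer token, with https://api.openai.com/v1 as the default base), but chatCompletionsTarget is a hypothetical helper, not part of the library.

```typescript
// Default base URL used by the official OpenAI client.
const DEFAULT_BASE_URL = "https://api.openai.com/v1";

interface RequestTarget {
  url: string;
  headers: Record<string, string>;
}

// Hypothetical helper: computes where a chat-completions call would go and
// which auth header it would carry for a given apiKey/baseURL pair.
function chatCompletionsTarget(apiKey: string, baseURL: string = DEFAULT_BASE_URL): RequestTarget {
  // Trim trailing slashes so ".../v1/" and ".../v1" produce the same URL.
  const base = baseURL.replace(/\/+$/, "");
  return {
    url: `${base}/chat/completions`,
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
  };
}
```

Only the host and the token change; the path and request body stay the same, which is why any server that speaks the same API can stand in for OpenAI.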

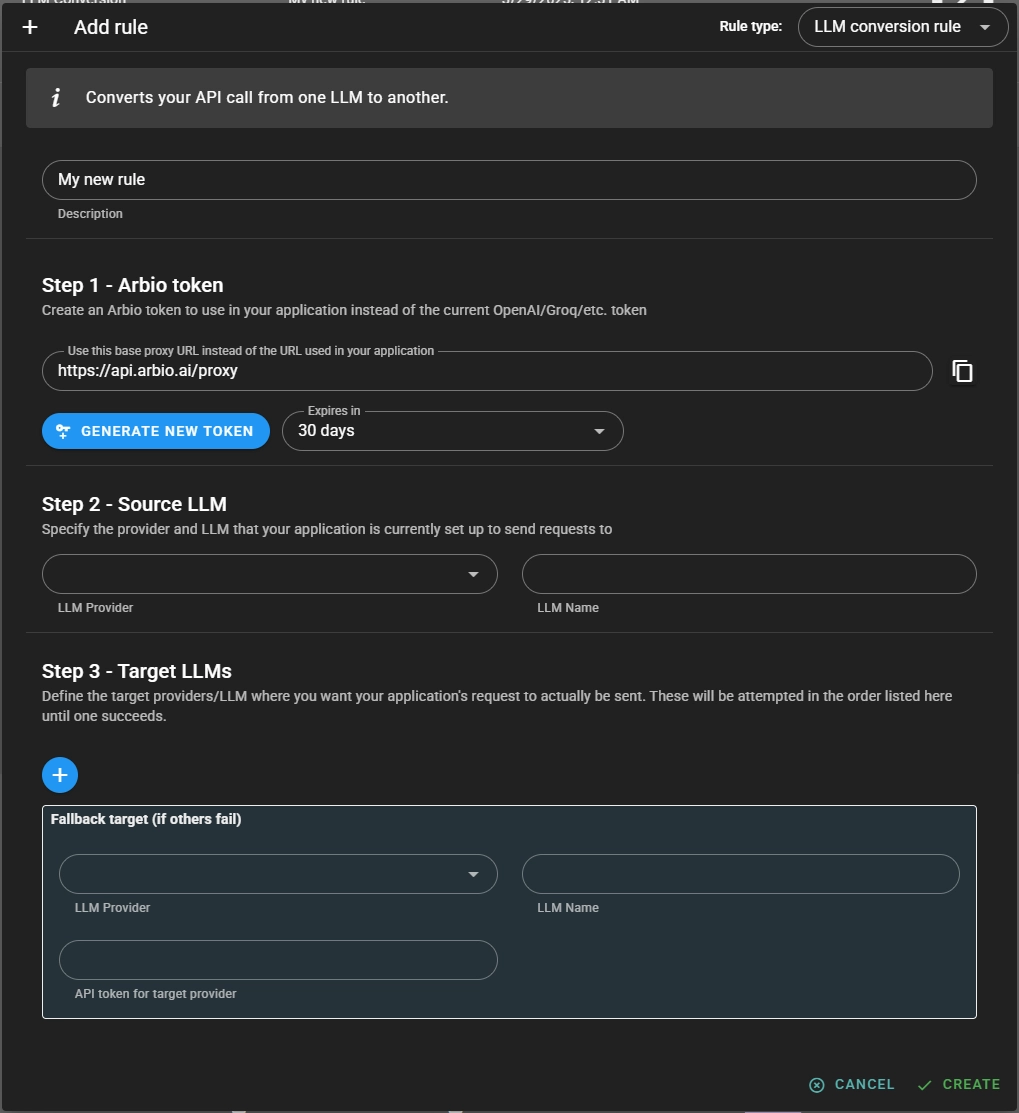

To point the library to Arbio, I need to specify a different baseURL and an Arbio-generated API token. To get both, I logged into https://app.arbio.ai, opened Routing Rules in the left-hand menu, and created a new rule by clicking the + button at the bottom.

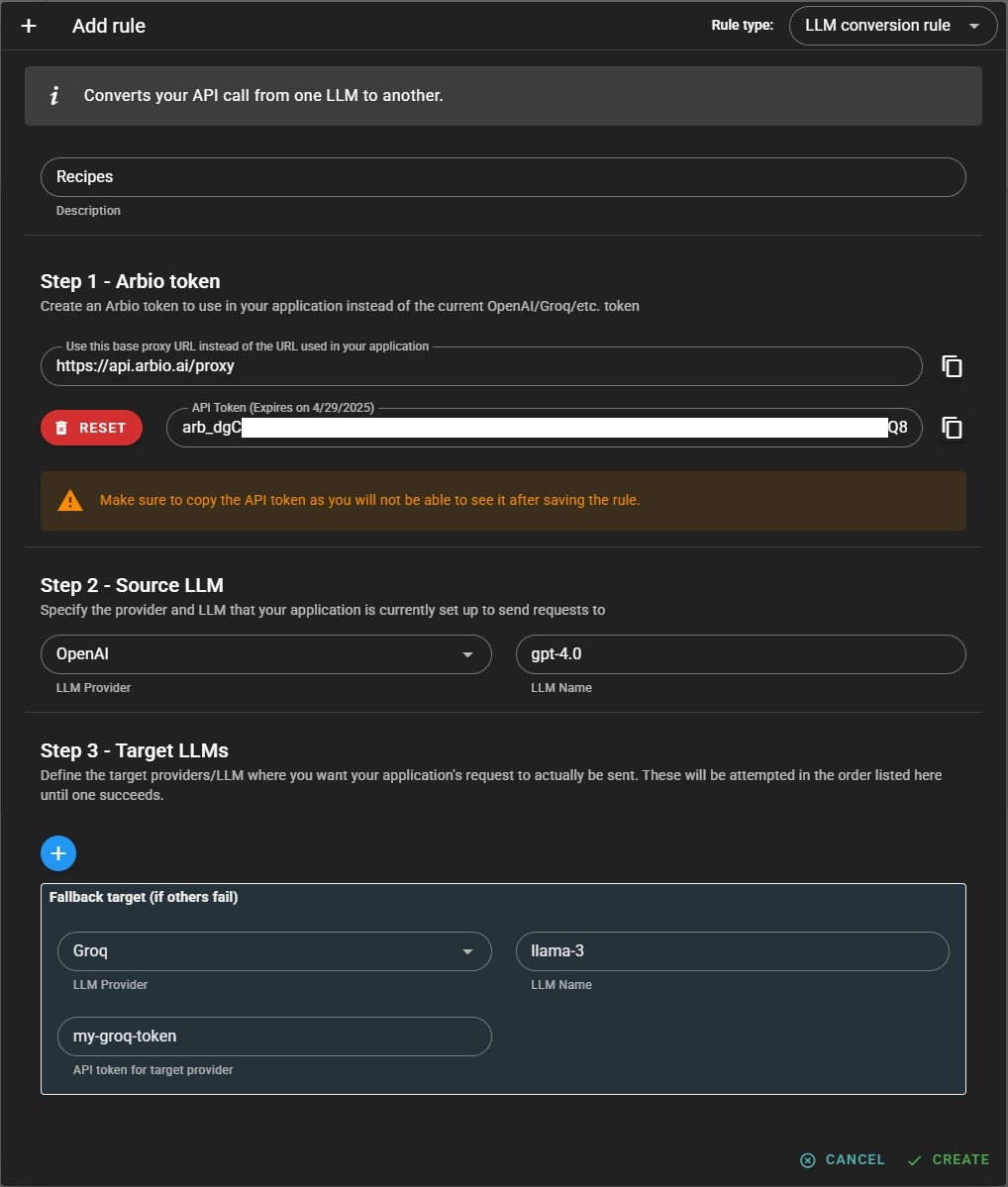

I filled in the name and generated a new token in Step 1. Then, in Step 2, I selected OpenAI as the LLM Provider and typed “gpt-4.0” as the LLM Name, since that’s the model I tell my prompts to use in my recipe app.

After this, in Step 3, I chose Groq as the LLM Provider and typed “llama-3” as the model name; this is where I now want the recipe app to actually send its requests.
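The rule boils down to a mapping from the model the app asks for to the model that actually serves the request. As a mental model only, here is a hypothetical sketch of that mapping; Arbio's actual rule format and matching logic are not shown in this post, the field names are invented, and the pass-through behavior for unmatched requests is an assumption.

```typescript
// Hypothetical representation of a routing rule; not Arbio's real format.
interface ModelRef {
  provider: string;
  model: string;
}

interface RoutingRule {
  name: string;
  from: ModelRef; // what the client asks for (Step 2)
  to: ModelRef; // where the request is actually sent (Step 3)
}

// Returns the target for a request. Assumption: when no rule matches,
// the requested model is used unchanged.
function resolveRoute(rules: RoutingRule[], requested: ModelRef): ModelRef {
  const match = rules.find(
    (r) => r.from.provider === requested.provider && r.from.model === requested.model,
  );
  return match ? match.to : requested;
}

// The rule from this post, expressed in the hypothetical format.
const recipeRule: RoutingRule = {
  name: "recipe-app", // hypothetical rule name
  from: { provider: "OpenAI", model: "gpt-4.0" },
  to: { provider: "Groq", model: "llama-3" },
};
```

Because the mapping lives on the gateway side, editing the "to" half of the rule changes which model answers without touching the app.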

Then, back in the recipe app, I modified the initialization of the OpenAI instance, replacing the apiKey and setting the baseURL to the values I copied from Step 1 of the Arbio rule setup.
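A minimal sketch of that change, assuming the Arbio token and base URL are kept in environment variables. The names ARBIO_API_TOKEN and ARBIO_BASE_URL and the arbioClientOptions helper are hypothetical, and the real base URL is whatever Arbio shows in Step 1. The helper builds the apiKey/baseURL options object that the OpenAI constructor from the openai package accepts, failing fast if either value is missing.

```typescript
// Options accepted by `new OpenAI(...)` that this change touches.
interface ClientOptions {
  apiKey: string;
  baseURL: string;
}

// Hypothetical helper: reads the Arbio token and base URL from an
// environment-like object and refuses to start with a half-configured client.
function arbioClientOptions(env: Record<string, string | undefined>): ClientOptions {
  const apiKey = env["ARBIO_API_TOKEN"]; // hypothetical variable name
  const baseURL = env["ARBIO_BASE_URL"]; // hypothetical variable name
  if (!apiKey || !baseURL) {
    throw new Error("ARBIO_API_TOKEN and ARBIO_BASE_URL must both be set");
  }
  return { apiKey, baseURL };
}
```

In the app this would be used as new OpenAI(arbioClientOptions(process.env)); the Converter itself stays unchanged, since it only depends on the injected client.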

Results

After this, every request the recipe app makes is routed to Arbio, which re-routes it to Groq’s llama-3 model. The same approach can route requests to any provider and model that Arbio supports, and I can switch models in the Arbio UI without changing my application.